Introduction

Many companies are creating technologies that make users feel as though they are in the game, including Microsoft's Kinect, the Nintendo Wii, and the PlayStation Move. Kinect uses a combination of image and range (depth) sensors to interpret the user's movements, which the virtual character then mimics. The Wii takes a different approach: handheld controllers track hand motion so that users feel they can interact with the game directly. The PlayStation Move combines the two, using both an image sensor and motion-sensing controllers. These technologies are important because they show the current market trend toward bringing users into the game environment. The problem with all of these systems is that the only visual information comes from a television, which stays in a fixed position in front of the user.

Immersify solves this problem with its main peripheral: a head-mounted display (HMD). Because the display is worn, it stays in front of the user even when they turn around. Through head tracking, Immersify can also change the point of view shown in the HMD, so the user sees what their virtual character would see. The user can look around the 3D environment as if they were actually there and can control their character as an extension of themselves. We intend to use the framework we create to build a game designed specifically to take full advantage of the Immersify hardware, which can serve as an example or prototype of how interactive, innovative controls can enhance games. It is our hope that through Immersify the user becomes part of the experience and not just an observer.
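To illustrate how head tracking can change the point of view, the sketch below converts a head orientation, expressed as yaw and pitch angles in degrees, into a unit vector that a virtual camera could look along. The function name `view_direction` and the axis convention (y up, looking down -z when yaw and pitch are both zero) are assumptions for illustration, not taken from the Immersify code.

```python
import math


def view_direction(yaw_deg: float, pitch_deg: float) -> tuple[float, float, float]:
    """Hypothetical helper: map head yaw/pitch (degrees) to a unit look vector.

    Assumed convention: y is up; yaw 0, pitch 0 looks along -z;
    positive yaw turns right (toward +x); positive pitch looks up.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),   # x: left/right component
        math.sin(pitch),                   # y: up/down component
        -math.cos(pitch) * math.cos(yaw),  # z: forward is -z
    )
```

Each frame, the yaw and pitch read from the head sensor could be passed to this function and the result used as the camera's forward vector, so the view turns as the head turns.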

The Immersify team has obtained all of the necessary hardware components: the HMD, the controller, and the two motion sensors. With these in hand, the team has created a basic framework that converts the data provided by the motion sensors into inputs that a Windows computer recognizes. Existing games can already use the Immersify Framework through the input mapper, which converts the sensor data into keystrokes and mouse movements. However, we are also going to create an Immersify controller that will greatly increase the number of actions available to the user, as well as a game designed specifically to take full advantage of the Immersify peripherals.

Components

Phidget Sensors

Currently we use two Phidget 3/3/3 sensors to track head and arm motion. Each sensor contains a 3-axis accelerometer that measures linear acceleration (including gravity), a 3-axis gyroscope that measures angular velocity (rate of rotation), and a 3-axis compass (magnetometer) that measures the surrounding magnetic field, from which the sensor's orientation relative to magnetic north can be determined.
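Because a gyroscope reports a rate of rotation, its readings must be integrated over time to obtain an angle, and small errors accumulate as drift. A common remedy, shown here only as an illustrative assumption and not as Immersify's confirmed approach, is a complementary filter that blends the integrated gyroscope angle with the tilt implied by gravity on the accelerometer. The sketch handles a single axis (pitch); the function names, the 0.98 blend factor, and the axis convention are all hypothetical.

```python
import math


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Tilt (pitch) in degrees implied by the gravity vector.

    Only valid while the sensor is not otherwise accelerating, so the
    accelerometer reads mostly gravity. The sign convention depends on how
    the sensor axes are mounted; here a level sensor is assumed to read (0, 0, 1) g.
    """
    return math.degrees(math.atan2(-ax, math.hypot(ay, az)))


def complementary_filter(prev_pitch_deg: float, gyro_rate_dps: float,
                         accel: tuple[float, float, float], dt: float,
                         alpha: float = 0.98) -> float:
    """One filter step (hypothetical parameters).

    Integrates the gyroscope rate (degrees/second) over dt for smooth
    short-term tracking, then blends in the accelerometer tilt so that the
    gyroscope's integration drift cannot accumulate over time.
    """
    gyro_estimate = prev_pitch_deg + gyro_rate_dps * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch_deg(*accel)
```

A larger `alpha` trusts the gyroscope more and reacts faster; a smaller one corrects drift faster but lets accelerometer noise through. A similar blend with the compass heading could correct drift in yaw, which gravity cannot observe.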

Controller

For now, the controller is a modified Wii Nunchuk coupled with a sensor. The controller is integrated with a microcontroller, which connects to the computer over USB and is exposed as a virtual COM port.
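The packet format the microcontroller sends over the virtual COM port is not described here, so the following is a hypothetical sketch: it assumes each line carries the joystick X and Y values (8-bit, centered near 128) followed by the C and Z button states, as in `200,128,1,0`. It shows how such a line could be parsed and the joystick normalized to the range [-1, 1]; `ControllerState` and `parse_packet` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class ControllerState:
    x: float  # joystick left/right, normalized to [-1, 1]
    y: float  # joystick down/up, normalized to [-1, 1]
    c: bool   # C button pressed
    z: bool   # Z button pressed


def _normalize(raw: int, center: int = 128, span: int = 127) -> float:
    """Map an assumed 8-bit joystick reading to [-1, 1], clamping the ends."""
    return max(-1.0, min(1.0, (raw - center) / span))


def parse_packet(line: str) -> ControllerState:
    """Parse one line in the hypothetical 'JX,JY,C,Z' format."""
    parts = line.strip().split(",")
    if len(parts) != 4:
        raise ValueError(f"expected 4 fields, got {len(parts)}: {line!r}")
    jx, jy, c, z = (int(p) for p in parts)
    return ControllerState(_normalize(jx), _normalize(jy), c == 1, z == 1)
```

On the computer side, lines could be read from the virtual COM port with a serial library such as pyserial (for example, `serial.Serial(port, baudrate).readline()`), decoded from bytes to text, and passed to `parse_packet`; the port name, baud rate, and field layout depend on the microcontroller firmware.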

Immersify Framework

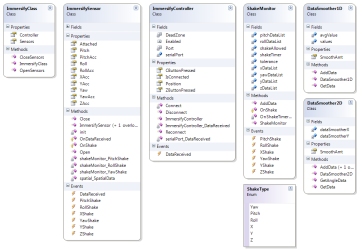

The Immersify Framework is the heart of the Immersify system. It not only translates the raw data coming from the sensors into data that other software can use directly, but also applies data cleansing and “smoothing” algorithms. It contains the functions that handle and monitor the sensors, and it is divided into three parts: the Immersify Class, the Data Smoother, and the Shake Monitor. The Immersify Class encapsulates the Immersify hardware as a whole and provides access to the data received from its peripherals. The Data Smoother averages the raw values provided by the sensors to reduce the impact of anomalous or outlying readings, which improves the accuracy of the movements read from the sensors. The Shake Monitor listens for a specific sequence of sensor events, such as a shake, and translates it into a desired action.
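The two processing parts described above can be sketched as follows, under stated assumptions. The smoother is a simple moving average over a fixed window of recent samples; the shake monitor is one possible event sequence (the acceleration on one axis crossing a positive and a negative threshold alternately, several times in quick succession). The class names mirror the components, but the window size, threshold, count, and timing values are hypothetical, and the real framework may use different rules.

```python
from collections import deque


class DataSmoother:
    """Moving average over the last `window` samples of one sensor axis."""

    def __init__(self, window: int = 5):
        self.samples = deque(maxlen=window)  # oldest sample drops out automatically

    def add(self, value: float) -> float:
        self.samples.append(value)
        return sum(self.samples) / len(self.samples)


class ShakeMonitor:
    """Reports a shake when the acceleration alternately exceeds +threshold and
    -threshold `required` times, with at most `max_interval` seconds between
    consecutive crossings."""

    def __init__(self, threshold: float = 1.5, required: int = 4,
                 max_interval: float = 0.4):
        self.threshold = threshold
        self.required = required
        self.max_interval = max_interval
        self._reset()

    def _reset(self) -> None:
        self.count = 0
        self.last_sign = 0
        self.last_time = None

    def update(self, accel: float, t: float) -> bool:
        if abs(accel) < self.threshold:
            return False  # too weak to count as part of a shake
        sign = 1 if accel > 0 else -1
        if sign == self.last_sign:
            return False  # still inside the same peak
        if self.last_time is not None and t - self.last_time > self.max_interval:
            self.count = 0  # too slow: this crossing starts a new sequence
        self.count += 1
        self.last_sign = sign
        self.last_time = t
        if self.count >= self.required:
            self._reset()
            return True
        return False
```

A larger smoothing window suppresses more noise but makes the tracked motion lag further behind the user's real movement, so the window size is a trade-off between stability and responsiveness.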

Demo Simulations

Immersify includes a demo that shows some of what is possible with the Immersify Framework. After launching the demo, the user appears in an outdoor area with two billboards in front of them, each leading to one of the following demos:

Paintsphere is a 3D painting program in which you look around with your head and paint on the walls of a spherical room with your arm motions. You can select different colors and change the brush size using the Immersify controller.

Darkroom is a demo in which you are positioned in a dark room and use your arm motions to aim a flashlight beam that illuminates it. You can also walk around using the Immersify controller's joystick, and the demo features 3D collision detection.

InputHandler

The Input Handler takes the data from the Immersify Framework and translates it into keystrokes or mouse movements based on a configuration file. It also lets the user change the sensitivity of the sensors and map “gestures” from the secondary sensor (for example, shaking it up and down) to key presses. Because its output is ordinary keyboard and mouse input, Immersify can be used with existing games that accept such input. The Input Handler can also save and load settings, so that separate profiles can be kept for different users or applications.
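The configuration-driven mapping can be sketched as a small profile object that holds a sensitivity and a gesture-to-key table, is stored as JSON, and is used to turn head motion into relative mouse movement. The JSON layout, the `Profile` fields, and the function names are hypothetical; the actual configuration file format is not described here. Injecting the resulting keystrokes or mouse movements on Windows would go through an OS facility such as the Win32 `SendInput` function, which this sketch leaves out.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class Profile:
    """Hypothetical per-user or per-game settings."""
    name: str
    mouse_sensitivity: float = 10.0  # mouse pixels per degree of head rotation
    gesture_keys: dict[str, str] = field(default_factory=dict)  # gesture name -> key


def head_to_mouse(d_yaw_deg: float, d_pitch_deg: float, profile: Profile) -> tuple[int, int]:
    """Convert a change in head orientation into a relative mouse movement."""
    dx = round(d_yaw_deg * profile.mouse_sensitivity)
    dy = round(-d_pitch_deg * profile.mouse_sensitivity)  # looking up moves the cursor up
    return dx, dy


def key_for_gesture(gesture: str, profile: Profile) -> Optional[str]:
    """Key mapped to a detected gesture, or None if the gesture is unmapped."""
    return profile.gesture_keys.get(gesture)


def save_profile(profile: Profile, path: str) -> None:
    with open(path, "w", encoding="utf-8") as f:
        json.dump(asdict(profile), f, indent=2)


def load_profile(path: str) -> Profile:
    with open(path, encoding="utf-8") as f:
        return Profile(**json.load(f))
```

Keeping one file per profile makes switching between users or games a matter of loading a different file.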

TestApp

The TestApp is used for debugging and gives a visual representation of the data provided by the sensors. It displays the sensor data in several graphs and plots, which makes it easier to interpret how physical interaction with the sensors is reflected in the data.

Immersify strives to immerse the user in the virtual environment further than is possible with current platforms.